You’re seeing a spike in traffic but no clicks, no scrolls, and barely a few seconds spent on the page. So, what gives?

Generative Engine Optimisation (GEO) has become a buzz-phrase, raising the question of how fund marketers should optimise their content so that AI engines can crawl it and understand its value.

When bots behave like humans

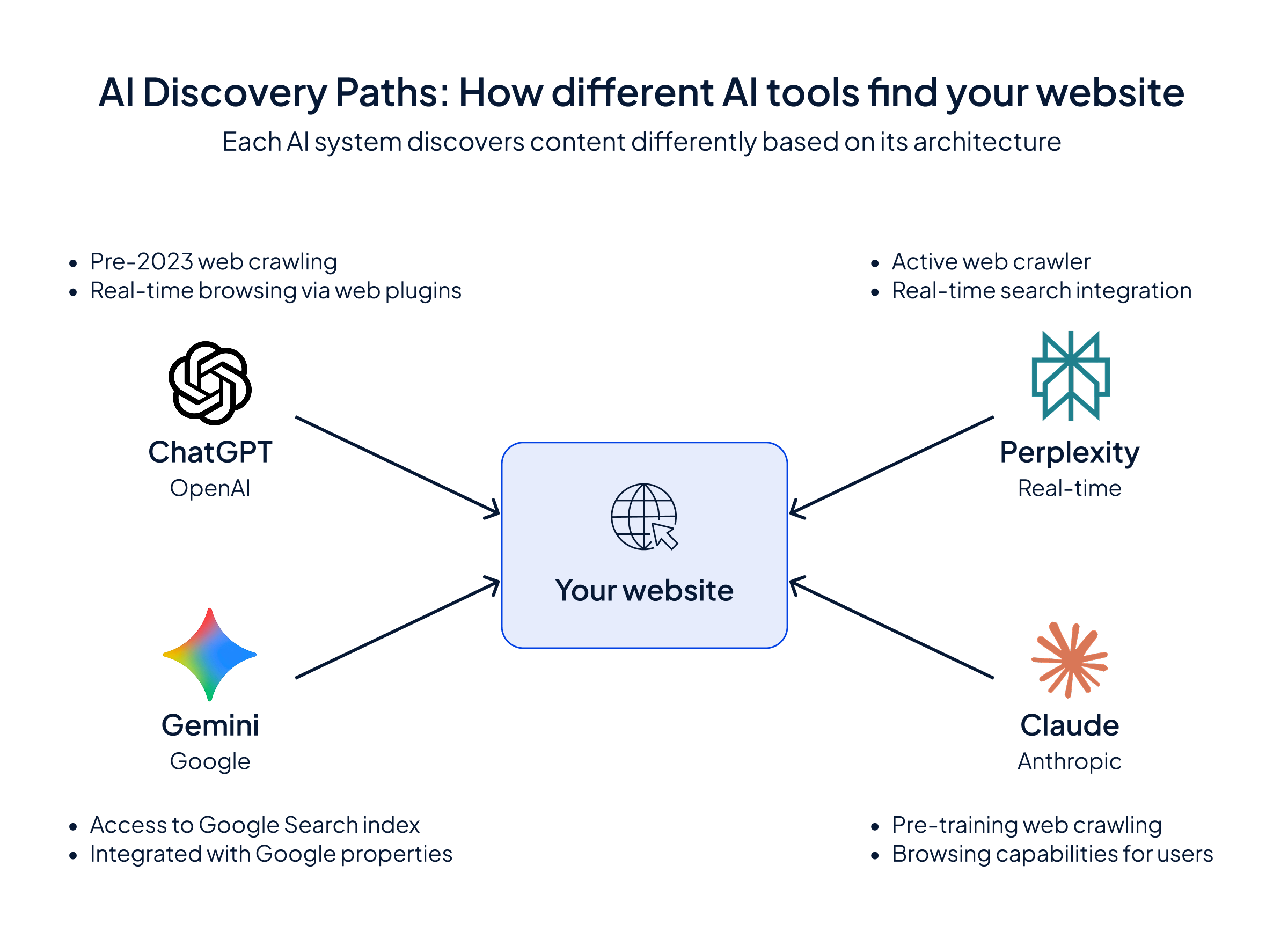

AI platforms like ChatGPT, Gemini, and Claude are now browsing your site like real users. At Kurtosys, we’ve always tracked unusual traffic patterns and threats and have observed that automated traffic has increased significantly in recent months.

(Image credit: Microsoft/Google/Anthropic)

Our bot management tools used to rely on spotting clear patterns, like repeated requests from the same user agent or location, and then blocking them. But AI-driven traffic is different: it’s faster, more erratic, and often bypasses traditional protections by mimicking human behaviour.

For us, the challenge isn't just about blocking bad bots; it's about discerning the valuable traffic from the noise. We have to be smart enough to filter out malicious scraping while still allowing platforms to crawl our sites in a way that benefits the end-user. It's a layered strategy that combines data collection with real-time defence.

Sunil Odedra - Chief Technology Officer, Kurtosys

Unexplained traffic: noise or opportunity?

Mysterious traffic isn’t just a challenge for tech teams managing risk; it also affects marketers trying to understand who’s engaging with their content. AI agents are now scraping sites or harvesting data to train models, blurring the line between genuine interest and background activity.

The key question: is this traffic from a human-driven AI query, or just an AI scraping your site? For marketers, the focus should be on reaching the real users behind the prompts – the ones who click through and land on your site. That’s where optimisation matters most.

The new discovery dilemma

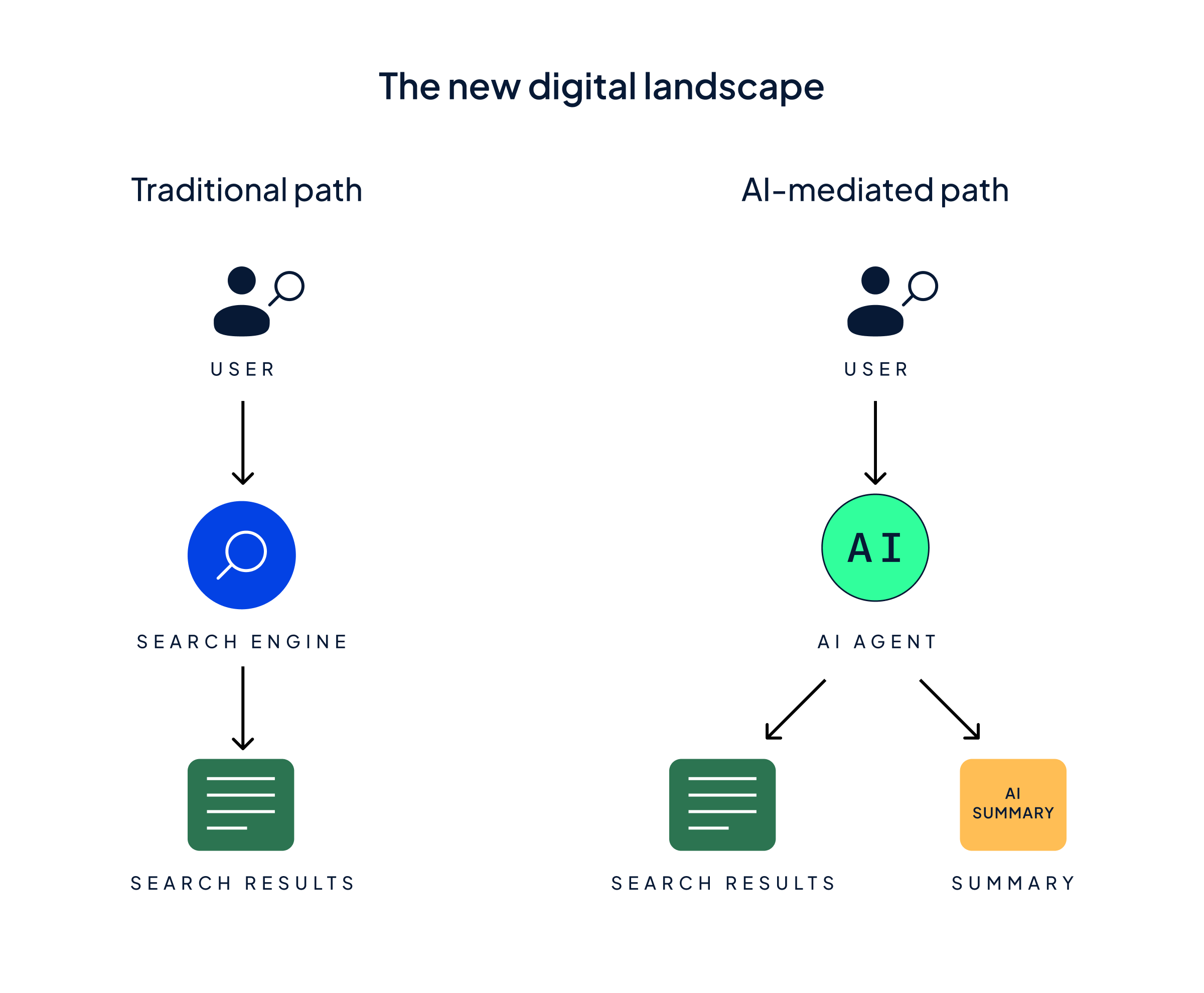

Just like with traditional search engines, AI tools are reshaping how content is found, but with a twist. Instead of ranking pages, they summarise content and answer questions without always giving credit.

This creates a new challenge: how do we ensure our content is discoverable and helpful, while still protecting our brand and its value?

Key insight: User query type determines which AI tool discovers your content and how it’s presented. Factual queries vs. opinion requests vs. research questions result in different discovery paths.

Here are the facts

Cloudflare reports that 1% of traffic is AI-based, with over 50 billion requests a day.* An estimated 40% of the top 1 million sites have been crawled by AI providers, and that number is constantly growing. We’ve seen approximately 5.6% of traffic in the last 30 days being AI-based, up from 1.3% in the previous period.

Spotting the signs of AI traffic

To identify AI-driven visits, we recommend using Google or Adobe Analytics to check for very short session lengths or single-page bounces. Non-human patterns, like rapid, consistent clicks with no idle time, are also red flags. When these are spotted, rate limiting is often used before resorting to full blocks or challenges.
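
As a rough illustration, the three signals above can be checked against exported session data. This is a minimal sketch, not how any particular analytics product works: the `Session` shape, the signal names, and both thresholds are assumptions chosen for the example and would need tuning against your own traffic.

```python
from dataclasses import dataclass
from statistics import pstdev


@dataclass
class Session:
    """One visit, in a hypothetical export format (not a real analytics schema)."""
    session_id: str
    pages_viewed: int
    event_times: list[float]  # seconds since the session started, ascending


def bot_signals(session: Session,
                min_duration_s: float = 3.0,      # assumed threshold
                max_gap_jitter_s: float = 0.05    # assumed threshold
                ) -> list[str]:
    """Return the names of the non-human signals this session shows."""
    signals = []
    times = session.event_times
    duration = times[-1] - times[0] if times else 0.0
    if duration < min_duration_s:
        signals.append("very_short_session")
    if session.pages_viewed <= 1:
        signals.append("single_page_bounce")
    # Gaps between consecutive events. Humans pause irregularly, so
    # near-identical gaps (no idle time, no variation) suggest automation.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    if len(gaps) >= 3 and pstdev(gaps) <= max_gap_jitter_s:
        signals.append("uniform_click_timing")
    return signals


suspect = Session("a", pages_viewed=1, event_times=[0.0, 0.5, 1.0, 1.5, 2.0])
reader = Session("b", pages_viewed=4, event_times=[0.0, 12.3, 47.9, 95.0])
print(bot_signals(suspect))
print(bot_signals(reader))
```

A session that trips several signals at once is a candidate for rate limiting first, rather than an outright block, since any single signal on its own can also describe a hurried human.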

Kurtosys use JA3 fingerprints to detect bots that disguise themselves by changing things like their user agent. Many AI content bots ignore rules in your robots.txt file, and some, like Perplexity, try to bypass security and appear human. As bots get smarter, so do defences. For example, Cloudflare’s new “AI labyrinth” traps bots in endless junk content instead of blocking them. But this can conflict with GEO, whose goal is to support valuable, user-driven traffic.
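
For readers wondering why a fingerprint survives a changed user agent: a JA3 fingerprint is an MD5 hash over five fields of the TLS ClientHello (version, cipher suites, extensions, elliptic curves, point formats), so the HTTP user agent never enters into it. The sketch below shows only the hashing step, assuming the ClientHello values have already been extracted by your edge or capture tooling. The function names are illustrative and this is not a description of Kurtosys's own implementation.

```python
import hashlib

# GREASE placeholder values (0x0A0A, 0x1A1A, ... 0xFAFA) are dropped
# before hashing so that they do not change the fingerprint.
GREASE = {0x0A0A + 0x1010 * i for i in range(16)}


def ja3_string(tls_version, ciphers, extensions, curves, point_formats):
    """Build the JA3 input: five comma-separated fields, values joined by '-'."""
    def join(values):
        return "-".join(str(v) for v in values if v not in GREASE)

    return ",".join([
        str(tls_version),
        join(ciphers),
        join(extensions),
        join(curves),
        join(point_formats),
    ])


def ja3_hash(tls_version, ciphers, extensions, curves, point_formats):
    """MD5 hex digest of the JA3 string: the fingerprint itself."""
    text = ja3_string(tls_version, ciphers, extensions, curves, point_formats)
    return hashlib.md5(text.encode("ascii")).hexdigest()


# Illustrative ClientHello values; the same client yields the same hash
# whatever it puts in its User-Agent header.
hello = dict(
    tls_version=769,
    ciphers=[47, 53, 5, 10, 49161, 49162, 49171, 49172, 50, 56, 19, 4],
    extensions=[0, 10, 11],
    curves=[23, 24, 25],
    point_formats=[0],
)
print(ja3_string(**hello))
print(ja3_hash(**hello))
```

In practice the resulting hash would be compared against fingerprints already associated with known automation, which is a lookup rather than anything clever.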

As threat actors turn to AI, we’re using AI ourselves. It monitors and neutralises risks like undesirable web traffic flows in real time. At the same time, it helps us safeguard and maintain legitimate business automation.

Harry Thompson - Chief Risk Officer, Kurtosys

In short: yes, analytics help, but tackling AI traffic effectively requires a layered strategy – from data collection to real-time optimisation.

When a user clicks through from AI-generated content to your site, they often have high intent to learn more about your fund. You can track this traffic using tools like Adobe or Google Analytics by looking for UTM parameters (e.g. ?utm_source=chatgpt.com) or referral sources like ChatGPT, Gemini, or Claude.ai.
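
If you want to apply the same check to raw server logs, the logic is small. The sketch below looks at the standard `utm_source` query parameter (note the underscore) and at the referrer's hostname. The hostname-to-label mapping is an assumption for illustration and should be extended to whichever platforms matter to you.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Assumed mapping for illustration; extend as needed.
AI_SOURCES = {
    "chatgpt.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}


def ai_referral_source(landing_url: str, referrer: str = "") -> Optional[str]:
    """Return the AI platform that referred this visit, or None."""
    query = parse_qs(urlparse(landing_url).query)
    candidates = list(query.get("utm_source", []))
    referrer_host = urlparse(referrer).hostname
    if referrer_host:
        candidates.append(referrer_host)
    for candidate in candidates:
        host = candidate.lower().removeprefix("www.")
        if host in AI_SOURCES:
            return AI_SOURCES[host]
    return None


print(ai_referral_source("https://example.com/funds?utm_source=chatgpt.com"))
print(ai_referral_source("https://example.com/funds", "https://claude.ai/chat"))
print(ai_referral_source("https://example.com/funds", "https://www.google.com/"))
```

Checking both places matters because a visit may arrive with a UTM tag but no referrer header, or the other way round.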

This helps you see which content is driving AI Optimisation (AIO) and where users are landing. With this insight, you can personalise the experience using tools like Adobe Target or Interaction Studio.

Just note: not all visits carry UTM parameters, and not all bots respect robots.txt rules, so tracking isn’t foolproof.

The key point: AI-referred users may be starting a new kind of customer journey – one that begins with a model, not a search engine. That changes how we think about content, brand presence, and even who owns the data.

Our own website often acts as a real-time lab for our solutions. By working closely with our engineering and product teams to analyse evolving AI traffic patterns, we exchange firsthand knowledge that directly informs how we design tools to help our clients with their own digital challenges.

Anneliese Thomas - Head of Marketing, Kurtosys

Summary:

- Overall, there’s a lot more work coming your way

- You have new marketing audiences to serve – human users using AI, and the AI tools themselves

- It is important to ensure your websites are discoverable on these platforms using GEO principles

- When they visit your website, their visits can be detected on your servers and in Adobe or Google Analytics – talk to your tech teams

- Ideate with your teams on personalisation for such users based on the content viewed.

FAQs

- What is Generative Engine Optimisation (GEO) and why should marketers care?

GEO is the practice of optimising your content so AI engines can correctly crawl, understand, and present your information. For fund marketers, GEO helps ensure that your content remains discoverable and valuable in AI-driven search results.

- How is AI traffic different from traditional bot traffic?

Traditional bots were easier to spot: they often came from the same location, used predictable patterns, and could be blocked outright. AI-driven traffic, however, mimics human behaviour, moves faster, and can bypass traditional protections, making it harder to detect and manage.

- How can I spot AI-driven visits on my site?

Look for patterns like ultra-short session lengths, single-page bounces, and rapid, consistent clicks with no idle time. Tools like Google Analytics or Adobe Analytics can help, and advanced methods like JA3 fingerprinting detect bots that disguise themselves by changing user agents.

- Can AI-driven traffic be managed without losing valuable visitors?

Yes. The goal isn’t just to block bots; it’s to filter out malicious scraping while allowing beneficial AI crawling that supports end-user discovery. This requires a layered defence, from rate limiting to advanced security tools, balanced with GEO strategies to attract genuine users.

- Are there legal or compliance concerns with AI scraping my site?

Possibly. In regulated industries, certain scraped data could create privacy or IP concerns. While some AI providers respect robots.txt, many do not, so it’s worth reviewing your compliance stance and security measures.

- Should we block AI crawlers in our robots.txt file?

Blocking is possible, but it’s a trade-off. It may reduce unwanted scraping, but you could also lose exposure in AI-generated search results. Many organisations are choosing selective blocking, allowing beneficial AI crawlers while blocking malicious ones.
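
To make selective blocking concrete, here is a small sketch that checks a robots.txt policy with Python's standard `urllib.robotparser` before you publish it. `GPTBot` and `ClaudeBot` are the crawler tokens published by OpenAI and Anthropic; the paths, and the decision about which crawler gets which access, are purely illustrative assumptions. Bear in mind that robots.txt is advisory, so it only shapes the behaviour of crawlers that choose to honour it.

```python
from urllib.robotparser import RobotFileParser

# Illustrative policy: paths and per-crawler choices are assumptions.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Allow: /insights/
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(agent: str, path: str) -> bool:
    """True if the policy above lets this crawler fetch this path."""
    return parser.can_fetch(agent, path)


for agent, path in [
    ("GPTBot", "/funds/"),
    ("ClaudeBot", "/insights/ai-traffic"),
    ("ClaudeBot", "/funds/"),
    ("Googlebot", "/funds/"),
]:
    print(agent, path, allowed(agent, path))
```

Python's parser applies the first matching rule within a group, which is why `Allow: /insights/` is listed before `Disallow: /`. Other parsers may resolve overlapping rules differently, so it is worth testing a policy against the crawlers you actually care about.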

- What steps should marketing and tech teams take together to manage AI traffic?

Align on a joint strategy: tech teams can filter and manage AI traffic, while marketing teams adjust GEO strategies to maximise the value of genuine AI-driven visits.